My engine runs in two parts: one is JavaScript/WebGL, and the other is C++/OpenGL. The two parts communicate via TCP/WebSocket. This probably seems like more work than it is worth, but I have found it to be invaluable. JavaScript is good, but it is not a silver bullet, and there is a good reason the majority of games are still written in C++. However, JavaScript does let me handle all of the high-level, non-performance-critical stuff much more easily than C++. It has other advantages, like instant code changes via console scripting or a page refresh. It also makes modding the engine really easy for other people -- no need to mess with compilers, just change some .js files.

The JavaScript side mostly handles the interface-type stuff, both in game (like inventory/stat screens) and in the tools used to modify the game environment. It might seem like running two OpenGL instances at once would bog down a system, but I coded it so that the browser halts rendering when you mouse out of it, unless it gets an update message. Even so, my real intention was to be able to run the browser portion on any device (e.g. a tablet -- there are already tablets that can run WebGL, some much better than others) and free up the rest of the system to render the game environment. There is no theoretical limit to the number of clients, so in theory you could run the editor across many computers/devices at once (syncing is very easy, just broadcast messages).
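To make the two ideas above concrete, here is a minimal sketch of a message hub that relays edits to every other client, plus a gate that decides whether the browser should draw a frame. Every name in it (`EditorHub`, `RenderGate`, the message shape) is hypothetical and not taken from the engine; a client is assumed to be anything with a `send(string)` method, such as a WebSocket connection.

```javascript
// Hypothetical names throughout; this is an illustration, not the engine's API.
class EditorHub {
  constructor() {
    this.clients = new Set();
  }

  // A client is anything exposing send(string), e.g. a WebSocket connection.
  add(client) {
    this.clients.add(client);
  }

  remove(client) {
    this.clients.delete(client);
  }

  // Relay an edit to every connected client except the one that made it.
  broadcast(sender, message) {
    const payload = JSON.stringify(message);
    for (const client of this.clients) {
      if (client !== sender) client.send(payload);
    }
  }
}

// Browser-side gate: draw while the mouse is over the page,
// or for one frame after an update message arrives.
class RenderGate {
  constructor() {
    this.mouseInside = false;
    this.dirty = false;
  }

  onMouseEnter() { this.mouseInside = true; }
  onMouseLeave() { this.mouseInside = false; }
  onUpdateMessage() { this.dirty = true; }

  // Call once per animation frame; returns true if the frame should be drawn.
  shouldRender() {
    const draw = this.mouseInside || this.dirty;
    this.dirty = false; // an update message buys exactly one redraw
    return draw;
  }
}
```

In a real page the gate would be wired to `mouseenter`/`mouseleave` events and checked inside a `requestAnimationFrame` loop, so an idle editor window costs almost nothing.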

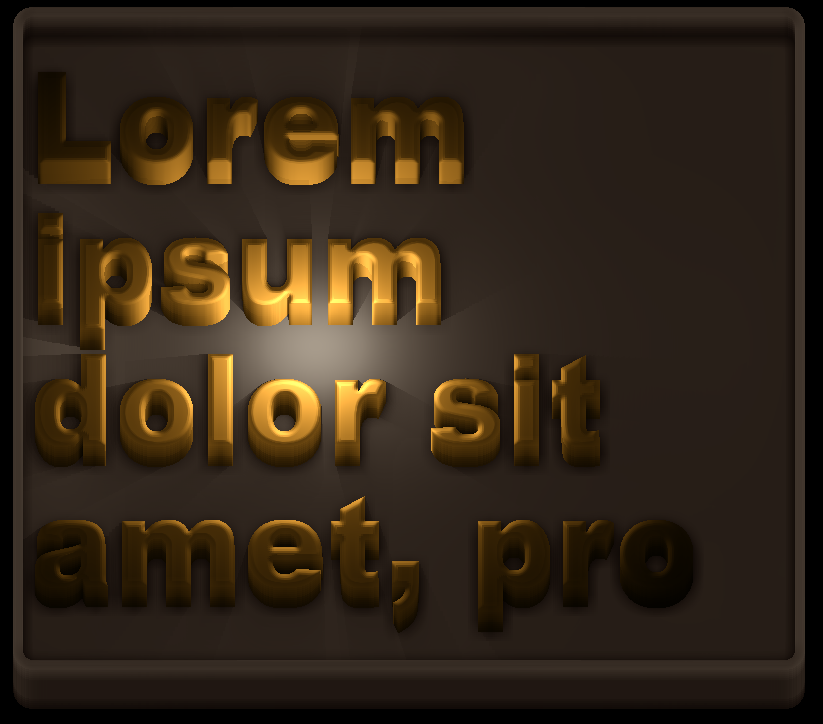

Because the browser side is used to modify many of the resources, like materials and shaders, it is best to be able to preview that stuff on the same screen. It might seem a bit crazy to create a text renderer from scratch in a web browser, but I wanted it to look good and be responsive. Right now it still needs optimization (mostly in just one area, the ambient occlusion).

Why voxels, why not polygons? After all, three.js has a built-in example for polygon text. Well, polygon text does not scale very well - after a few hundred characters your system will not respond well, and it's also a lot of geometry to handle. My text is just a single quad for each letter, with some shader magic (although it does do a real rendering of volumetric data, nothing is "faked"). It does not matter how many characters you have on the screen; the only thing that affects performance is the size of the buffer the text is rendered to. It does not use any dirty tricks like rendering to a canvas: this text is all laid out in code with bitmap fonts (surprisingly fast, although like I said the AO shader is causing some lag right now). There is of course some trade-off between "looking cool" and legibility, but the engine is flexible and can render fonts in pretty much any style, even plain-looking text. It supports all fonts, left/center/right alignment, text wrapping (with word breaking for continuations), any font size or scale, different styles of beveling, per-letter materials, etc. It also uses ray-cast shadows. The text is genuinely 3D, although many optimization tricks are used in light of the orthographic, fixed perspective (I figure most users won't care to rotate the text around).
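The wrapping and one-quad-per-letter layout described above could look roughly like the sketch below. It is a simplification under loud assumptions: glyphs are fixed-width, positions are measured in columns and rows rather than pixels, and the function names (`wrapText`, `buildQuads`) are made up for illustration. A real bitmap-font layout would use per-glyph advance widths from the font's layout data.

```javascript
// Hypothetical sketch: fixed-width glyphs, columns/rows instead of pixels.
function wrapText(text, maxCols) {
  if (maxCols < 2) throw new RangeError("maxCols must be at least 2");
  const lines = [];
  let line = "";
  for (let word of text.split(/\s+/).filter(Boolean)) {
    // Start a new line if the word does not fit after the current content.
    if (line.length > 0 && line.length + 1 + word.length > maxCols) {
      lines.push(line);
      line = "";
    }
    // A word longer than a whole line is split, with a dash marking the continuation.
    while (word.length > maxCols) {
      lines.push(word.slice(0, maxCols - 1) + "-");
      word = word.slice(maxCols - 1);
    }
    line = line.length > 0 ? line + " " + word : word;
  }
  if (line.length > 0) lines.push(line);
  return lines;
}

// Emit one quad record per visible character; align is "left", "center" or "right".
function buildQuads(lines, align, maxCols) {
  const quads = [];
  lines.forEach((line, row) => {
    const slack = maxCols - line.length;
    const offset =
      align === "right" ? slack : align === "center" ? Math.floor(slack / 2) : 0;
    for (let i = 0; i < line.length; i++) {
      if (line[i] === " ") continue; // spaces need no geometry
      quads.push({ code: line.charCodeAt(i), col: offset + i, row });
    }
  });
  return quads;
}
```

For example, `wrapText("the extraordinary fox", 8)` yields the lines `the`, `extraor-`, `dinary`, and `fox`, and each non-space character then becomes a single quad record that a vertex buffer could be filled from.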

This is actually the harder part of creating the GUI for my game; the layout is fairly easy (in fact I already wrote a layout engine in JavaScript a year ago for a canvas-based GUI, in less than a day). It will support most layout paradigms you are used to (basically like creating an HTML page, but in JSON). The other benefit is that this look meshes seamlessly with the rest of the game (note this is not at all the final look of the interface, just a test run). I think it might look really good when I introduce some volumetric materials (cobblestone, wood, brass, etc).

From a technical standpoint, this is how the engine works:

- First, fonts are converted to bitmaps and layout data in an external tool, with some embedded information like the character code stored as a color (thus no support for Unicode yet, as this is a 0-255 value at this time).

- Normal and distance information is calculated for the bitmap font on the GPU and stored. Normals and distance are both used for the font beveling and the font edges.

- Distance information is calculated using the anti-aliased version of the font...sort of like signed distance font rendering but not quite. This prevents weird artifacts (like the ones you would see doing a chisel bevel in Photoshop).

- Fonts are laid out using a dynamic mesh with enough buffer room to store some additional characters (e.g. dashes used on words that run over two lines). Thus there is no need to destroy/recreate the buffer unless the amount of text changes dramatically.

- A background is calculated (potentially based on the size of the string it contains), and drawn with a single quad (rounded corners, or other types of corners, are calculated in the shader).

- A base image is rendered and extruded by stacking many quads, all within one batch without any shader changes. As it gets extruded, it uses the discard command to get rid of voxels that do not exist at the current height, thus avoiding transparency, which speeds up the process quite a bit. This process could just as well be done by rendering a volume within a single cube; it just depends on your needs. It can render maybe 100 voxel layers on a slower machine in real time. However, I only do this process once until the scene changes again.

- Ambient occlusion is calculated in screen space.

- Lighting is calculated in real time, with ray-cast shadows. Shadows use the distance from the last ray hit to make them look softer.
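The extrusion step in the list above can be emulated on the CPU to show the idea. This is a rough sketch, not the engine's shader: it assumes a binary glyph bitmap (the engine uses the anti-aliased font), a brute-force distance search that only suits tiny bitmaps, and a linear bevel where height grows with distance from the glyph edge. The names `distanceToEdge`, `extrude`, and `bevelWidth` are all hypothetical. Writing a 0 into a layer stands in for the fragment shader's `discard`.

```javascript
// Hypothetical CPU emulation of "stack quads and discard by height".
// Distance from each filled pixel to the nearest empty pixel; pixels outside
// the bitmap count as empty. Brute force, only sensible for tiny bitmaps.
function distanceToEdge(bitmap) {
  const h = bitmap.length;
  const w = bitmap[0].length;
  const isEmpty = (x, y) =>
    x < 0 || y < 0 || x >= w || y >= h || bitmap[y][x] === 0;
  const dist = [];
  for (let y = 0; y < h; y++) {
    const row = [];
    for (let x = 0; x < w; x++) {
      let best = isEmpty(x, y) ? 0 : Infinity;
      // Scan the bitmap plus a one-pixel empty border around it.
      for (let ey = -1; ey <= h && best > 0; ey++) {
        for (let ex = -1; ex <= w; ex++) {
          if (isEmpty(ex, ey)) {
            best = Math.min(best, Math.sqrt((ex - x) ** 2 + (ey - y) ** 2));
          }
        }
      }
      row.push(best);
    }
    dist.push(row);
  }
  return dist;
}

// Build layerCount slices. A pixel survives in layer k only if its bevel
// height reaches that layer; otherwise it is "discarded" (written as 0).
function extrude(bitmap, layerCount, bevelWidth) {
  const dist = distanceToEdge(bitmap);
  const layers = [];
  for (let k = 0; k < layerCount; k++) {
    const threshold = (k + 1) / layerCount; // normalized height of this layer
    layers.push(
      dist.map((row) =>
        row.map((d) => (Math.min(d / bevelWidth, 1) >= threshold ? 1 : 0))
      )
    );
  }
  return layers;
}
```

With a solid 5x5 block, two layers, and a bevel width of 2, the bottom layer keeps all 25 pixels while the top layer keeps only the inner 3x3, which is the stepped bevel you would expect. Because every pixel is either kept or dropped, no blending is needed, which matches the reason given above for using discard instead of transparency.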

Anyway, I am traveling to Germany and Switzerland in a few hours, so I may not be able to answer questions right away. I will write more when I get time. :)

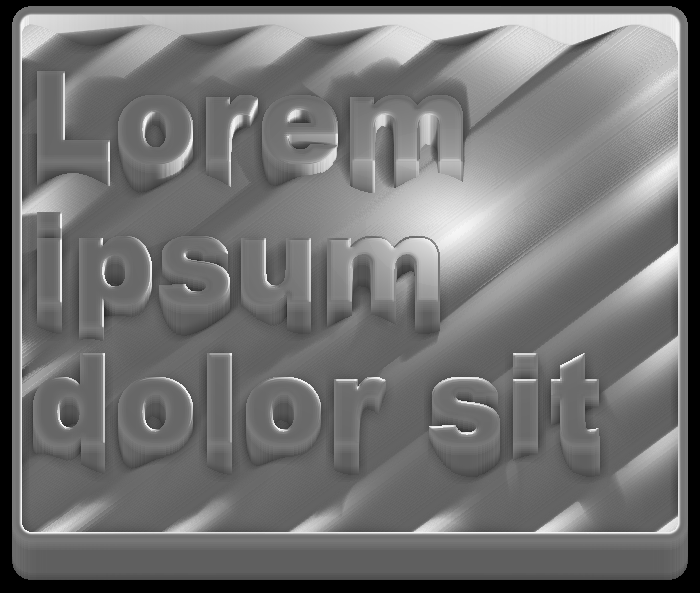

One last screenshot, just messing around with the voxels and using a sine wave on the background height:
